This process uses the Brovey method to fuse the higher-resolution panchromatic band of a data item from an optical collection with the item's lower-resolution multispectral bands. The result is a GeoTIFF file whose multispectral bands have a higher spatial resolution.

Most often, optical collections produce the following bands:

- A panchromatic band with a high spatial resolution but a low spectral resolution

- Several multispectral bands with a low spatial resolution but a high spectral resolution

Pansharpening combines the complementary strengths of both band types: the spatial detail of the panchromatic band and the spectral detail of the multispectral bands. The result is a pansharpened reflectance product.

| Specification | Description |
|---|---|
| Provider | Pixel Factory by Airbus |
| Process type | Enhancement, from 4 credits per km² |

Input data items must come from a CNAM-supported collection and be added to storage in 2023 or later.

| Criteria | Requirement |
|---|---|
| Product type | Input data items must come from a multispectral collection. |
| Spectral bands | Input data items must have panchromatic and multispectral bands. |
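Before you submit a job, you could check the spectral band requirement locally. The following is a minimal sketch. It assumes that the input item is available as a STAC item dictionary whose assets describe their bands with the STAC Electro-Optical extension (`eo:bands` entries with a `common_name` such as `pan` or `blue`). The helper names and the set of names treated as multispectral are hypothetical choices. The sketch doesn't check the collection or storage-date requirements.

```python
from typing import Any

# Hypothetical choice: STAC eo "common_name" values treated as multispectral.
MULTISPECTRAL_COMMON_NAMES = {
    "coastal", "blue", "green", "red", "yellow", "rededge", "nir", "nir08", "nir09",
}


def band_common_names(item: dict[str, Any]) -> set[str]:
    """Collect the eo:bands common names declared on all assets of a STAC item."""
    names: set[str] = set()
    for asset in item.get("assets", {}).values():
        for band in asset.get("eo:bands", []):
            common_name = band.get("common_name")
            if common_name:
                names.add(common_name)
    return names


def meets_band_requirement(item: dict[str, Any]) -> bool:
    """True if the item declares a panchromatic band and at least one multispectral band."""
    names = band_common_names(item)
    return "pan" in names and bool(names & MULTISPECTRAL_COMMON_NAMES)


example_item = {
    "assets": {
        "pan": {"eo:bands": [{"name": "P", "common_name": "pan"}]},
        "multispectral": {"eo:bands": [
            {"name": "B0", "common_name": "blue"},
            {"name": "B1", "common_name": "green"},
            {"name": "B2", "common_name": "red"},
        ]},
    }
}
print(meets_band_requirement(example_item))  # True
```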

You must specify the data items you want to apply the process to.

You must specify the title of the output data item.

You can specify weights for the multispectral bands, or leave them undefined to use automatically optimized weights. In that case, the algorithm uses sensor-optimized weights if they're available for the input data item. Otherwise, it generates new optimal weights.

The weighted Brovey method used by this algorithm is based on the following values:

- The pseudo-panchromatic intensity. It's a weighted average of the multispectral bands. Each multispectral band can contribute differently to this average, and the weights reflect how important each band is for approximating the panchromatic data.

- The real panchromatic intensity. It’s the actual panchromatic intensity data that serves as a reference value.
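The following minimal NumPy sketch shows how the two intensities interact in the textbook weighted Brovey transform. It assumes the following:

- Each fused band equals the multispectral band multiplied by the ratio of the real to the pseudo-panchromatic intensity.
- The multispectral bands are first resampled to the panchromatic grid by nearest-neighbor pixel repetition.
- The pseudo-panchromatic intensity is normalized by the sum of the weights.

The function names are hypothetical. Pixel Factory's implementation may differ, for example in resampling, weight normalization, or radiometric handling.

```python
import numpy as np


def upsample_nearest(ms: np.ndarray, factor: int) -> np.ndarray:
    """Resample (bands, h, w) data to (bands, h*factor, w*factor) by repeating pixels."""
    return ms.repeat(factor, axis=1).repeat(factor, axis=2)


def weighted_brovey(ms: np.ndarray, pan: np.ndarray, weights, eps: float = 1e-6) -> np.ndarray:
    """Fuse multispectral bands (bands, H, W) with a panchromatic band (H, W).

    `ms` must already be on the panchromatic pixel grid.
    """
    w = np.asarray(weights, dtype=np.float64)
    # Pseudo-panchromatic intensity: weighted average of the multispectral bands.
    pseudo_pan = np.tensordot(w, ms, axes=1) / w.sum()
    # Scale every band by real / pseudo intensity; eps guards against division by zero.
    ratio = pan / np.maximum(pseudo_pan, eps)
    return ms * ratio[np.newaxis, :, :]


# Usage: a 4x4 panchromatic band and 3 multispectral bands at half the resolution.
rng = np.random.default_rng(0)
ms_low = rng.uniform(0.1, 1.0, size=(3, 2, 2))
pan = rng.uniform(0.1, 1.0, size=(4, 4))
weights = [0.2, 0.34, 0.23]  # blue, green, red weights from the sample request body
fused = weighted_brovey(upsample_nearest(ms_low, 2), pan, weights)
print(fused.shape)  # (3, 4, 4)
```

With weights normalized this way, the weighted average of the fused bands reproduces the panchromatic band exactly. The ratios between bands within each pixel stay the same as in the resampled multispectral input. This is how the method injects panchromatic spatial detail while preserving relative spectral information.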

The output value of a multispectral band is computed as follows:

Use the pansharpening name ID for the processing API.

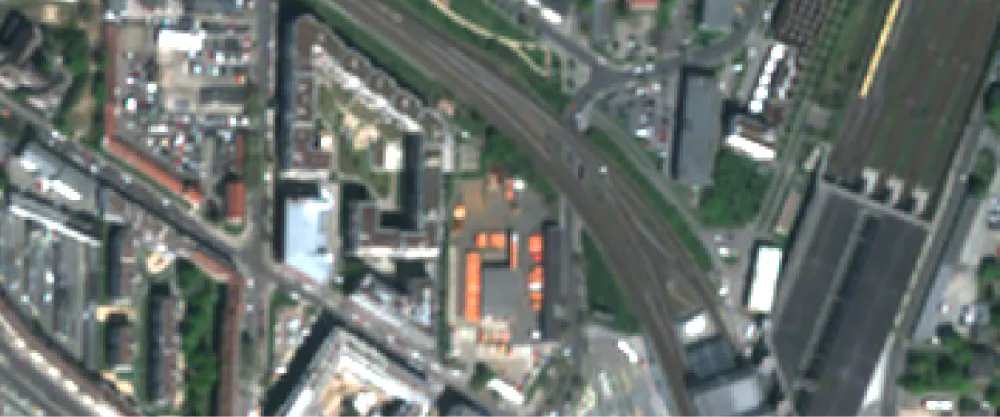

```json
{
  "inputs": {
    "title": "Pansharpened SPOT imagery over Germany",
    "item": "https://api.up42.com/v2/assets/stac/collections/21c0b14e-3434-4675-98d1-f225507ded99/items/23e4567-e89b-12d3-a456-426614174000",
    "greyWeights": [
      { "band": "blue", "weight": 0.2 },
      { "band": "green", "weight": 0.34 },
      { "band": "red", "weight": 0.23 }
    ]
  }
}
```

| Parameter | Type | Description |
|---|---|---|
| `inputs.title` | string | Required. The title of the output data item. |
| `inputs.item` | string | Required. A link to the data item in the following format: |
| `inputs.greyWeights` | array of objects | Optional. The weight factors by which spatial details of multispectral bands are scaled. |
| `inputs.greyWeights.band` | string | Required if `inputs.greyWeights` is specified. The name of the band from the STAC asset with the |
| `inputs.greyWeights.weight` | float | Required if `inputs.greyWeights` is specified. The multiplication value that lets you modulate the influence of multispectral bands on the final image. The range of allowed values spans from |
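If you build requests programmatically, a helper like the following can assemble the `inputs` object in the shape of the sample request body above. This is a sketch. The function name is hypothetical, and the helper doesn't enforce the allowed range of `weight` values or check band names against the STAC asset. The item URL uses placeholders rather than real IDs.

```python
import json
from typing import Optional


def build_pansharpening_inputs(title: str, item_url: str,
                               grey_weights: Optional[dict[str, float]] = None) -> dict:
    """Assemble a pansharpening request body.

    Leave grey_weights as None (or empty) to let the process pick optimized weights.
    """
    inputs: dict = {"title": title, "item": item_url}
    if grey_weights:
        # One {"band", "weight"} object per band; the allowed weight range isn't checked here.
        inputs["greyWeights"] = [
            {"band": band, "weight": float(weight)} for band, weight in grey_weights.items()
        ]
    return {"inputs": inputs}


payload = build_pansharpening_inputs(
    "Pansharpened SPOT imagery over Germany",
    "https://api.up42.com/v2/assets/stac/collections/<collection-id>/items/<item-id>",
    {"blue": 0.2, "green": 0.34, "red": 0.23},
)
print(json.dumps(payload, indent=2))
```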